Mixing sounds is a way of manipulating sound, handling the essential elements of sounds. Whenever you try to manipulate a sound there are two ways to go:

- Either the proven trial-and-error principle: you play around with some knobs, listen to the results and decide whether you like them. With trial and error you may end up with something special without really knowing how you got there or what makes it so special. While playing around it is easy to forget the steps you took, so it helps to take notes.

- Or you follow a structured approach, where you more or less know what you are doing and have a final result in mind. The risk is that the upfront design and thought process consumes your creative energy. This can demotivate you, or make you invest so much time in preparation that you are exhausted by the time you get to be creative. So be careful not to think too much about what you want to do before actually doing anything.

When we start to produce sounds or even music, it is essential to know some basic properties of sound, because what we really do is manipulate those properties: propagation, amplitude, frequency and timbre.

Anatomy of a sound

From a physical point of view, a sound is an acoustic event: a vibration at a certain frequency that propagates through an elastic medium. The basic elements of a sound are its physical properties. These are complex properties that can be manipulated to create different kinds of sounds, and they are what we mix together to create sound effects of all kinds. The basic properties of a sound are its frequency, its amplitude, its timbre and its propagation. Frequency determines the pitch of the sound, amplitude determines its loudness, timbre is its characteristic “sound” or tone color, and propagation is how the sound spreads. For mixing purposes we can treat these properties as largely independent of each other, so we can create sound effects of all kinds just by changing a single property.

Sound frequency

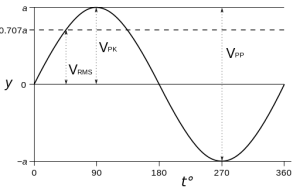

The frequency of a sound is the number of times per second it oscillates. It is measured in hertz (Hz), defined as the number of cycles per second of a periodic phenomenon. The lowest frequency of a sound is its fundamental frequency. The other frequencies in the sound build on this fundamental and are called partials or overtones; when they are whole-number multiples of the fundamental they are called harmonics. Each musical instrument has its own timbre because of the different overtones it produces on top of the fundamental. Because sound is primarily described by frequency, a sound can be graphed as the curve of its oscillation over time, its waveform. The simplest waveform is the sine wave, with its smooth, symmetrical shape. Mathematically, a sine wave is a curve that describes a smooth repetitive oscillation.

Sounds based on sine waves are often called “pure” tones. This means that, whatever its other properties such as amplitude, the wave consists of a single frequency. A sine wave is characterized by its frequency (the number of cycles per second), its wavelength (the distance the wave travels through its medium during one period) and its amplitude (the size of each cycle). A pure sine wave is an artificial sound. A pure tone has the unique property that linear acoustic systems change only its amplitude and phase, not its wave shape. Sine waves are generally uncomfortable to the ear, and sound localization is often more difficult with sine waves than with other sounds.
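As a rough illustration of the three parameters named above (frequency, wavelength and amplitude), here is a minimal Python sketch that computes the samples of a sine wave. The function names, the sample rate of 44100 Hz and the default speed of sound of 340 m/s are assumptions made for this example.

```python
import math

def sine_wave(frequency_hz, amplitude=1.0, duration_s=1.0, sample_rate=44100):
    """Return a list of samples of a pure sine tone (illustrative helper)."""
    n_samples = round(duration_s * sample_rate)
    return [
        amplitude * math.sin(2 * math.pi * frequency_hz * n / sample_rate)
        for n in range(n_samples)
    ]

def wavelength_m(frequency_hz, speed_m_per_s=340.0):
    """Distance the wave travels during one period: speed divided by frequency."""
    return speed_m_per_s / frequency_hz

# 10 milliseconds of a 440 Hz tone at half of full amplitude
tone = sine_wave(440.0, amplitude=0.5, duration_s=0.01)
print(len(tone), "samples, wavelength in air:", round(wavelength_m(440.0), 3), "m")
```

Changing only `frequency_hz` changes the pitch, and changing only `amplitude` changes the volume, which is the independence the article relies on.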

When sine waves are made audible, amplitude and frequency determine how we perceive the sound: they determine the volume and the pitch of a tone. Frequency is heard as pitch. The audible range for humans lies roughly between 20 Hz and 20 kHz. Frequency and pitch both relate to how fast the sound is vibrating, but frequency is something a computer can measure, whereas pitch is something that we perceive.

Synthesizers often include a sine wave generator together with other simple basic waveforms such as sawtooth, triangle and square waves. In the end these are all built from sine shapes, but having them ready-made makes work easier for electronic musicians.
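To make the point that frequency is measurable, here is one simple way a program could estimate it: counting how often the signal crosses zero in the upward direction. This is only a sketch that works for clean, single tones; the function name and the test tone are assumptions for the example, and real pitch detection is considerably more involved.

```python
import math

def estimate_frequency(samples, sample_rate):
    """Estimate frequency in Hz by counting upward zero crossings."""
    if len(samples) < 2:
        return 0.0
    crossings = 0
    for previous, current in zip(samples, samples[1:]):
        # One upward crossing per cycle: from below zero to zero or above
        if previous < 0 <= current:
            crossings += 1
    duration_s = len(samples) / sample_rate
    return crossings / duration_s

# One second of a 440 Hz sine tone as test input
sample_rate = 44100
test_tone = [math.sin(2 * math.pi * 440.0 * n / sample_rate) for n in range(sample_rate)]
estimate = estimate_frequency(test_tone, sample_rate)
print("estimated frequency:", estimate, "Hz")
```

The estimate should land close to 440 Hz. What the program cannot tell us is how high or low the tone feels to a listener, which is the pitch.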

- Sine wave with a frequency of 440 Hz (musical “A”)

- Square wave at 3500 Hz

- Triangle wave with a frequency of 220 Hz

- Sawtooth wave with a frequency of 440 Hz
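The four basic waveforms listed above can be sketched in a few lines of Python. Each function maps a phase (the position within one cycle, counted in cycles) to a value between -1 and 1. The function names and the `render` helper are assumptions for this illustration; these naive shapes are fine for looking at waveforms, while real synthesizers usually take extra care to avoid digital artifacts.

```python
import math

def sine(phase):
    return math.sin(2 * math.pi * phase)

def square(phase):
    # +1 for the first half of each cycle, -1 for the second half
    return 1.0 if (phase % 1.0) < 0.5 else -1.0

def triangle(phase):
    # Starts at -1, rises linearly to +1 at mid-cycle, then falls back
    return 1.0 - 4.0 * abs((phase % 1.0) - 0.5)

def sawtooth(phase):
    # Rises linearly from -1 to +1 over one cycle, then jumps back
    return 2.0 * (phase % 1.0) - 1.0

def render(shape, frequency_hz, duration_s=0.01, sample_rate=44100):
    """Sample one of the shapes above at the given frequency."""
    n_samples = round(duration_s * sample_rate)
    return [shape(frequency_hz * n / sample_rate) for n in range(n_samples)]

examples = {
    "sine 440 Hz": render(sine, 440.0),
    "square 3500 Hz": render(square, 3500.0),
    "triangle 220 Hz": render(triangle, 220.0),
    "sawtooth 440 Hz": render(sawtooth, 440.0),
}
```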

Sound vs Noise

Acoustic events formed by a theoretically unlimited number of individual waves with minimal frequency spacing between them are called “noise”. Noise is not periodic: it has no repeating pattern, so its waveform cannot be predicted. There are nevertheless many types of noise, which can be distinguished by their intensity, timbre and rhythm. White noise and pink noise, for example, are typical noises used in mixing.
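White noise is the easiest of these to sketch in code: every sample is simply an independent random value, so there is no repeating pattern to predict. The following minimal Python example assumes uniformly distributed samples and a hypothetical `white_noise` helper; the optional seed only exists so the example can be reproduced.

```python
import random

def white_noise(n_samples, amplitude=1.0, seed=None):
    """Return n_samples independent random values in [-amplitude, amplitude]."""
    rng = random.Random(seed)
    return [rng.uniform(-amplitude, amplitude) for _ in range(n_samples)]

noise = white_noise(10000, amplitude=0.5, seed=1)
```

Pink noise needs additional filtering on top of this, because its energy falls off towards higher frequencies, and is not covered by this sketch.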

Timbre

Almost all natural acoustic events differ from a sine wave. Sine and cosine waves are just the most basic building blocks of more complex waves: as additional frequencies are combined, the waveform takes on a more complex shape. Every acoustic event whose waveform differs from a sine is formed by overlapping sine waves of different amplitudes and frequencies. In a musical tone the frequencies of these individual waves are related to each other by whole-number ratios. This is what we call and perceive as “sound”. Every instrument has energy at multiple frequencies; these are the “harmonics”. Some effects are described as frequency effects, but they do not really act on frequency itself; they act on timbre. Timbre is the collection of sound energy at multiple frequencies, and it is described in terms of overtones or partials. The relation between the partials of a sound determines the timbre we perceive. Sounds we describe as “hard” or “brilliant” most probably have a high number of overtones, whereas we perceive sounds as “soft” when the overtones are fewer and more distinct.
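The idea of building a timbre from overlapping sine waves can be tried out directly. The sketch below adds up sine waves at whole-number multiples of a fundamental frequency, each with its own amplitude. The function name and the two amplitude lists are made-up illustrations, not measurements of any real instrument.

```python
import math

def additive_tone(fundamental_hz, harmonic_amplitudes, duration_s=0.01, sample_rate=44100):
    """Sum sine waves at 1x, 2x, 3x ... the fundamental frequency.

    harmonic_amplitudes[0] is the amplitude of the fundamental,
    harmonic_amplitudes[1] that of the second harmonic, and so on.
    """
    n_samples = round(duration_s * sample_rate)
    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        value = sum(
            amp * math.sin(2 * math.pi * fundamental_hz * k * t)
            for k, amp in enumerate(harmonic_amplitudes, start=1)
        )
        samples.append(value)
    return samples

# Same pitch (220 Hz), different timbre: few overtones vs. many overtones
soft = additive_tone(220.0, [1.0, 0.3])
bright = additive_tone(220.0, [1.0, 0.5, 0.33, 0.25, 0.2, 0.17])
```

Both tones have the same fundamental, so they are heard at the same pitch, but the different mix of overtones gives each a different waveform and therefore a different timbre.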

Sound effects related to the timbre

Audio effects that control timbre are, for example, equalizers and filters. An equalizer is a collection of filters. Any time you change the amplitude at a specific frequency, you are applying a filter and manipulating the timbre.
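A very small example of such a filter is the one-pole low-pass, which lets low frequencies through almost unchanged and reduces the amplitude of high frequencies. The sketch below is one common textbook form, chosen here as an assumption for illustration; the helper names `tone` and `peak` are hypothetical, and the filters in real equalizers are usually more elaborate.

```python
import math

def one_pole_lowpass(samples, cutoff_hz, sample_rate=44100):
    """Smooth the signal so that content above cutoff_hz gets quieter."""
    dt = 1.0 / sample_rate
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)  # smoothing factor between 0 and 1
    out = []
    y = 0.0
    for x in samples:
        y += alpha * (x - y)  # move the output a fraction towards the input
        out.append(y)
    return out

def tone(frequency_hz, duration_s=0.05, sample_rate=44100):
    n_samples = round(duration_s * sample_rate)
    return [math.sin(2 * math.pi * frequency_hz * n / sample_rate) for n in range(n_samples)]

def peak(samples):
    return max(abs(s) for s in samples)

low_peak = peak(one_pole_lowpass(tone(100.0), cutoff_hz=1000.0))
high_peak = peak(one_pole_lowpass(tone(10000.0), cutoff_hz=1000.0))
print("100 Hz peak:", round(low_peak, 2), " 10 kHz peak:", round(high_peak, 2))
```

With a cutoff of 1000 Hz, the 100 Hz tone keeps nearly its full amplitude while the 10 kHz tone comes out much quieter. Applied to a sound with many overtones, this removes brilliance, which is a change of timbre.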

Sound Propagation

Propagation describes how sound moves through a medium. Sound can travel through air, metal, wood or water, and in each of those media it moves at a different speed. It takes time for sound to get from one place to another: in air it travels at about 340 meters per second. The medium can be an air column, as in a flute, whose molecules pass their vibrations on to their neighbours so that the sound spreads into the room. As the air molecules move back and forth they change the air pressure and create what we know as a sound wave. Our brain is able to distinguish the arrival times of a sound at each ear, which allows us to locate sounds coming from the left or the right. Manipulating delay times can therefore change our sense of how sounds move through the air.
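The arithmetic behind these delays is simple: time equals distance divided by speed. The following sketch uses the 340 m/s figure from the text; the function names and the sample rate of 44100 Hz are assumptions for the example.

```python
SPEED_OF_SOUND_AIR = 340.0  # meters per second, the approximate figure used in the text

def propagation_delay_s(distance_m, speed_m_per_s=SPEED_OF_SOUND_AIR):
    """Time in seconds that sound needs to travel distance_m."""
    return distance_m / speed_m_per_s

def delay_in_samples(distance_m, sample_rate=44100, speed_m_per_s=SPEED_OF_SOUND_AIR):
    """The same delay expressed as a whole number of audio samples."""
    return round(propagation_delay_s(distance_m, speed_m_per_s) * sample_rate)

def arrival_time_difference_s(distance_left_m, distance_right_m):
    """Positive result: the sound reaches the right ear later than the left."""
    return propagation_delay_s(distance_right_m) - propagation_delay_s(distance_left_m)

# A source 3.4 m away is heard about 10 ms after it sounds
print(propagation_delay_s(3.4), "s =", delay_in_samples(3.4), "samples")
```

A path difference of only 20 cm between the two ears already gives an arrival time difference of roughly 0.6 milliseconds, which is the kind of cue the brain uses to place a sound to the left or the right.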

Sound effects related to propagation

Every surface reflects the sound from an instrument or another sound source differently. Our sense of space is based on sound reflecting off objects and arriving at different times from all those different surfaces. What we do in a mix with regard to propagation is create a sense of space, depth and real location. Because the reverberation differs from one room to another, simulating sound bouncing off surfaces with different delays can imitate different rooms. Effects like delay, reverb, phasers and flangers all manipulate propagation and account for spatial effects.
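The simplest of these effects, a delay with feedback, can be sketched as a short loop: each output sample is the dry input plus a quieter copy of what went in some samples earlier, and that copy is fed back so the echoes repeat and fade. The function name and the parameters `feedback` and `mix` are assumptions for this example; reverbs, phasers and flangers build on the same idea with many or moving delays.

```python
def echo(samples, delay_samples, feedback=0.5, mix=0.5):
    """Add decaying echoes to a signal using a circular delay buffer.

    delay_samples must be at least 1.
    """
    buffer = [0.0] * delay_samples
    index = 0
    out = []
    for x in samples:
        delayed = buffer[index]                  # what entered delay_samples ago
        out.append(x + mix * delayed)            # dry signal plus the echo
        buffer[index] = x + feedback * delayed   # feed part of the echo back in
        index = (index + 1) % delay_samples
    return out

# A single click followed by silence shows the repeating, fading echoes
impulse = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(echo(impulse, delay_samples=2))
# prints [1.0, 0.0, 0.5, 0.0, 0.25, 0.0, 0.125]
```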

Amplitude of sound

The amplitude relates to the volume of a sound. If frequency and pitch are related to the length of the wave, the amplitude can be seen as the height of the wave. Physically it describes how strongly a sound wave compresses the air as it moves in longitudinal waves; rarefaction is the opposite phase, in which the air thins out with each swing of the wave. The size of these pressure changes is what we perceive as louder or quieter: a high amplitude makes a sound louder. Amplitude is usually expressed in decibels (dB). The decibel is a relative measure, so it always refers to some reference level, and we measure amplitude in many places.

- In the air we use decibels of sound pressure level, dB SPL (also written dBSPL). dB SPL is referenced to the threshold of hearing, so 0 dB SPL is the quietest thing we can hear in the air.

- In digital environments the amplitude is measured relative to full scale in dBFS, with 0 being the loudest value that can be represented in the computer and the scale running downwards into negative values towards silence.

Therefore you see negative dB scales in most mixing software and hardware, but positive dB scales when you measure loudness in the air. Technically, amplitude and loudness are different things, though in everyday language we often don’t distinguish them. Amplitude can be measured, while loudness is an individual perception of volume. Controlling amplitude is a primary function of mixing: we set the amplitudes of our tracks relative to each other. This way we sculpt individual sounds or tracks into the sound relief we call a mix.
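Both decibel scales follow the same formula, 20 times the base-10 logarithm of a ratio; only the reference differs. The sketch below assumes digital samples where 1.0 is full scale and uses the standard reference pressure of 20 micropascals for dB SPL. The function names are made up for the example.

```python
import math

def amplitude_to_dbfs(amplitude):
    """Convert a linear amplitude (1.0 = full scale) to dBFS."""
    if amplitude <= 0:
        return float("-inf")  # silence has no finite decibel value
    return 20 * math.log10(amplitude)

def dbfs_to_amplitude(dbfs):
    """Convert a dBFS value back to a linear amplitude."""
    return 10 ** (dbfs / 20)

def pressure_to_db_spl(pressure_pa, reference_pa=20e-6):
    """Convert a sound pressure in pascals to dB SPL."""
    return 20 * math.log10(pressure_pa / reference_pa)

print(amplitude_to_dbfs(1.0))            # full scale is 0 dBFS
print(round(amplitude_to_dbfs(0.5), 2))  # half the amplitude is about -6 dBFS
print(round(pressure_to_db_spl(1.0)))    # a pressure of 1 pascal is about 94 dB SPL
```

This is why a fader in mixing software tops out near 0 and shows negative numbers below it, while a sound level meter in a room shows positive numbers.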

Sound effects related to Amplitude

Panning controls the relative level of a signal between the two speakers. There are also plugins that control the amplitude of the signal over time, such as expanders, gates, compressors and limiters.
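In code, these amplitude effects come down to multiplying or clamping sample values. The sketch below shows a pan control, a hard limiter and a very crude gate. The constant-power pan curve is one common choice and is an assumption here, as are all function names. Real gates, compressors and limiters follow the signal level over time with attack and release settings instead of reacting to each sample on its own.

```python
import math

def pan(sample, position):
    """Split a mono sample into (left, right).

    position runs from -1.0 (fully left) through 0.0 (center) to +1.0 (fully right).
    """
    angle = (position + 1.0) * math.pi / 4.0  # 0 to 90 degrees
    return sample * math.cos(angle), sample * math.sin(angle)

def hard_limit(samples, ceiling=1.0):
    """Clamp every sample so it never exceeds the ceiling."""
    return [max(-ceiling, min(ceiling, s)) for s in samples]

def simple_gate(samples, threshold):
    """Mute every sample whose level is below the threshold."""
    return [s if abs(s) >= threshold else 0.0 for s in samples]

print(pan(1.0, -1.0))                                 # all signal in the left speaker
print(hard_limit([0.5, 1.5, -2.0]))                   # prints [0.5, 1.0, -1.0]
print(simple_gate([0.05, 0.5, -0.01], threshold=0.1)) # prints [0.0, 0.5, 0.0]
```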
